Secrets Of Successful Web Scraping

Web scraping, occasionally referred to as web harvesting or web data extraction, is used for gathering specific data from websites, usually with the help of automated software.

This technique was designed with a view to simplify research by structuring data from multiple sources into a single asset that can later be analyzed and exploited.

Among all the web scraping methods, professionals tend to distinguish a combination of programming languages and web scraping software. As of today it is considered to be the most effective course of action in terms of flexibility and scalability.

With the help of our article you will learn to determine which web scraping tool suits your needs best. Besides, prepare to receive a couple of useful recommendations for successful realization of your scraping project.

How To Choose Web-Scraping Software

It is advised to keep in mind several core factors in order to choose a tool that meets one’s requirements perfectly. No need to worry though, these aspects are explained in a coherent manner in the following paragraphs.

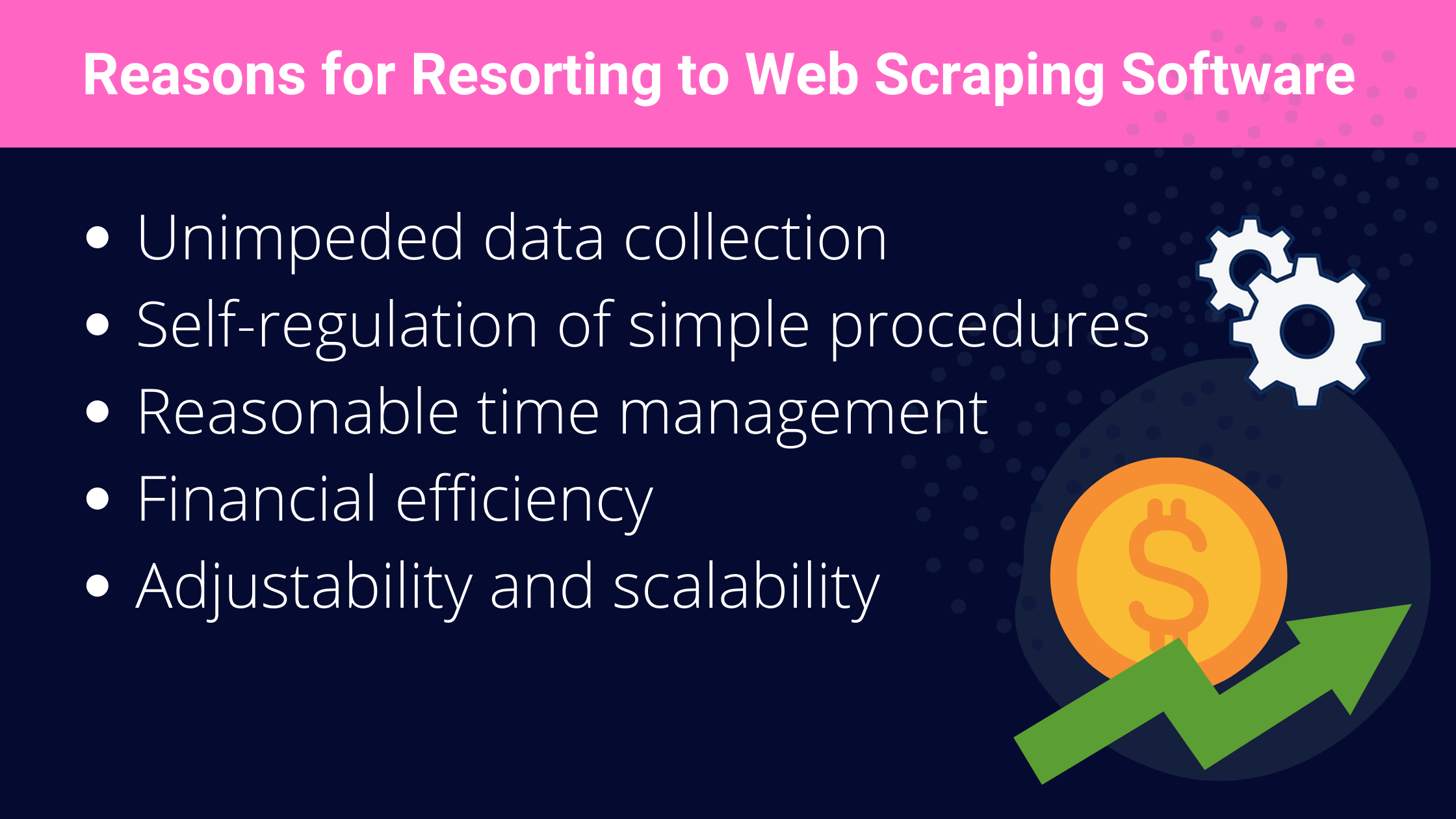

Reasons for Web Scraping Software Usage

It is not impossible to build a web scraper on your own. It will, however, require unnecessarily complex and time-consuming procedures, thus rendering your efforts inefficient.

For instance, a web scraping software will automatically send an HTTP request to a server and create a parser to navigate a DOM without manual intervention, saving the user's time.

Therefore, in order to determine which web scraping solution suits you best, make sure the software has the following features:

- Unimpeded data collection. Effectiveness of a web scraper depends directly on your chosen method of data extraction. The tool of your choice has to simplify and accelerate the process. Make sure that its parsing capability is sufficient enough to work with JSON and CSV files.

- Self-regulation of simple procedures. Your web scraper tool absolutely has to overcome bans and restrictions without human intervention. Otherwise, the whole project might be pretty useless. It needs to be capable of handling CAPTCHAs and IP rotations, leaving only simple work for you.

- Reasonable time management. Users must be pleased with the speed of information acquisition, performed by the selected software. One of its principal tasks is to manage various limitations, such as time-outs, and to extract helpful information as swiftly and accurately as possible.

- Financial efficiency. In order to guarantee success for your project, you’ll need to make sure that the effectiveness of your selected scraping tool is worth its cost. The internet is overflowing with poor-quality software, sold for unreasonable prices. We suggest you choose reliable propositions to ensure that results of data extraction meet both your requirements and financial capabilities.

- Adjustability and scalability. All internet resources have specific features that must be taken into account by your scraping software. A good scraping tool will be able to scale and adjust to any architecture. If you’re not receiving the outcome you were expecting, your selected software may lack some much needed flexibility.

Combining Web Scraping Software with Programming Languages

Scraping software will deliver more valuable output while combined with your usual skills and tools.

If your project’s success depends directly on your performance then it might not be the best solution to begin learning a whole new set of languages and frameworks.

Alternatively, it makes more sense to focus on your already strong skills and proficiencies, relying on external tools for reaching the best possible results.

Therefore, it is best to ensure that your chosen web scraping software is aligned with your current objectives, enhances your skills and expands future opportunities. In this manner, its implementation shall be beneficial and will complement your achievements.

For instance, you will need to come up with different solutions for JavaScript or C# based projects. For this purpose we provide you with our selection of web scraping software, so that you don’t encounter any difficulties with the usage of different coding languages, regardless of your project’s complexity.

7 Best Programs For Web Scraping Available in 2022

Feel free to try out these solutions in whatever order you see fit to determine which one matches your needs better than most. All of them are capable of performing the necessary tasks.

We hereby present our compilation of software possibilities, elaborating on their functionality and privileges.

1. RocketScrape

RocketScrape API is a professional software that helps to scrape HTML or JSON from any webpage using a single API request. It is a reliable, fast web scraping API that will handle all the proxies, CAPTCHAs, browsers, and geolocation issues, so that you can focus entirely on data collection.

RocketScrape covers numerous modes of application, such as customer reviews, job & hiring data, price & product information for ecommerce field, real estate data, sales leads and other common cases of web scraping.

It supports Javascript rendering, which means it can render web pages as if it were a real browser. At the same time it is capable of managing thousands of headless instances using the latest Chrome version.

All the described advantages allow users to focus on extracting the necessary data, without having to deal with concurrent headless browsers that consume RAM and CPU significantly.

Besides, it supports all programming languages, which is an extremely valuable feature, as data can be simply retrieved by any HTTP client.

Major benefits of RocketScrape :

It gives you an option to choose your proxy geolocation for obtaining localized content;

It enables real-time usage monitoring on a well-designed dashboard;

It has high concurrency rates, which users can set and monitor, even with a free version!;

It always provides enough performance, regardless of the amount of submitted requests or volume of data fragments.

Keep in mind that a strong competitive advantage of RocketScrape is their generous free plan. They offer 5000 API requests a month for free. And if you need more possibilities, an appealing lifetime deal is also on the table.

Moreover, RocketScrape integrates seamlessly with other tools presented in this article. All you have to do is add an extra line of code to send the request through RocketScrape servers and let them handle the rest.

Don’t linger any longer and start powerful web scraping today!

2. Scrapy

Scrapy would be a good choice if you’re working with Python coding language.

This framework was designed specifically for web scraping in Python. This open-source software is capable of building “spiders”, otherwise known as highly efficient scrapers, with the help of Python’s syntax.

The biggest advantage of this tool is built-in support for CSS and XPath selectors, which permits the user to collect vast amounts of data with no more than a few lines of code.

A distinctive feature of Scrapy is Scrapy Shell – an interactive service that can be used for testing CSS and XPath expressions. It is very useful as opposed to running other scripts to test every change.

You may even entrust Scrapy to export the whole database into structured data frameworks (such as CSV, JSON, or XML), and it will complete the task without manual intervention.

But if you really want to achieve the best results, consider using Scrapy along with RocketScrape. In this manner, Scrapy will guarantee simultaneous maintenance of several web scrapers (or “spiders”) from the same file and RocketScrape will take care of anti-scraping measures, making your efforts much more efficient.

3. Use a coding language

It is no secret that among all the coding languages JavaScript takes a special place, due to its widespread usage. It is defined by a rather broad and diverse application, since developers can build almost anything with it, including web scrapers.

Main features:

- Its hallmark is Node.js library, a back-end runtime environment which allows the creation of programs with JavaScript programming language.

- It has the ability to parse HTML/XML files that use CSS and XPath expressions with JQuery-like syntax. And in order to scrape static pages Cheerio can initiate lighting fast scripts.

- Cheerio is capable of scraping data swiftly, compared to other software since it doesn’t load external resources, execute JavaScript, produce a visual rendering or apply CSS. But you shouldn’t write it off for that reason, because your main objective while using Cheerio is to save as much time as possible, and these resources are really not required for scraping static pages.

However, on some occasions, you may need to scrape a dynamic page that needs to execute JS before the content loads. That's when RocketScrape comes along! Its renderer provides extra functions to the Cheerio scraping tool.

4. Puppeteer

Alternatively, you can use Puppeteer to work with Node.js library. This scraping tool is able to imitate the behavior of a regular browser from our script, thanks to its ability to control a headless chromnium browser.

Similarly to previous software, Puppeteer is better suited for scraping dynamic pages using Node.js. However it is unlikely that you will need it when you’re already using Cheerio and RocketScrape. Though it should be noted that RocketScrape does not interact with the websites and can only execute JS scripts.

In some cases the software needs to mimic human-like actions, such as clicking, scrolling, or filling a form. And this is when Puppeteer comes along using imitation to access and extract the necessary data.

Please note that, in order to work productively with this library, the script must be written using Async and Await.

In such a way you will easily design a successful web scraper for a challenging project, assuming you take advantage of all the beneficial features presented by different software (notably headless browser manipulation by Puppeteer, parsing proficiency of Cheerio and RocketScrape’s special properties).

5. ScrapySharp

There is no denying that C# is one of the most sufficient and adjustable coding languages. Considering that its wide application is constantly enhanced, especially in the professional domain, it is no surprise to see web scraping software developed specifically for C# programming.

Thus, if you need to select certain elements in a HTML document using CSS and XPath selectors, a good solution would be to involve ScrapySharp C# library, which uses HTMLAgilityPack extension for achieving the best scraping results.

However, be aware that this library is not capable of manipulating a headless browser. It is much better suited for taking care of things like headers and cookies.

Luckily, RocketScrape is perfect for adding extra functionality to the library, which makes it an essential tool to use in 89% of all projects, especially in cases when an initial JavaScript execution is required.

However, if interacting with the website is necessary for data extraction, Puppeteer Sharp, which is a port for .Net of Node.js’s Puppeteer library, would be your best choice.

6. Rvest

Rvest is a software mostly recommended for those who use R as their principal coding language. This knowledge significantly simplifies working with Rvest library , since with R language users can to send HTTP requests and parse the returned DOM with the help of CSS and XPath expressions.

Besides, Rvest features include data manipulation functions, that can be used to create convenient data visualizations, that surpass alternative software in terms of style and convenience. Keep in mind that both R coding language and Rvest web scraper might be of specific interest for users, specialising in data science.

Magrittr - is another distinctive feature worth mentioning. It is typically used simultaneously with the user's main scraping library in order to use the %>% operator that permits to “pipe a value forward into an expression or function call”.

It may appear as a non-essential function, however, you will be pleasantly surprised with its ability to shorten programming time and to improve the code’s “tidiness” and elegance.

7. ScreperAPI

Last, but not the least, we present to you ScraperAPI , which prides itself on “making data accessible for everyone”.

This software tends to use 3rd party proxies, machine learning, huge browser farms, and vast experience with statistical data to help users bypass anti-scraping techniques.

Key features of this scraping tool are:

- Automated IP rotation and CAPCHAs handling;

- Ability to access geo-sensitive data with the help of simple parameters integrated to the target’s URL;

- JavaScript rendering, designed to extract valuable data contained behind a script, without using headless browsers;

- Capable of scaling to around 100 million scraped pages per month;

- Can manage concurrent threads to ensure swift work of a web scraping tool;

- Initiates multiple attempts with IPs and headers to find the perfect match with the purpose to achieve a 200 status code request;

It won’t be a problem to integrate ScraperAPI with the other tools in the list.

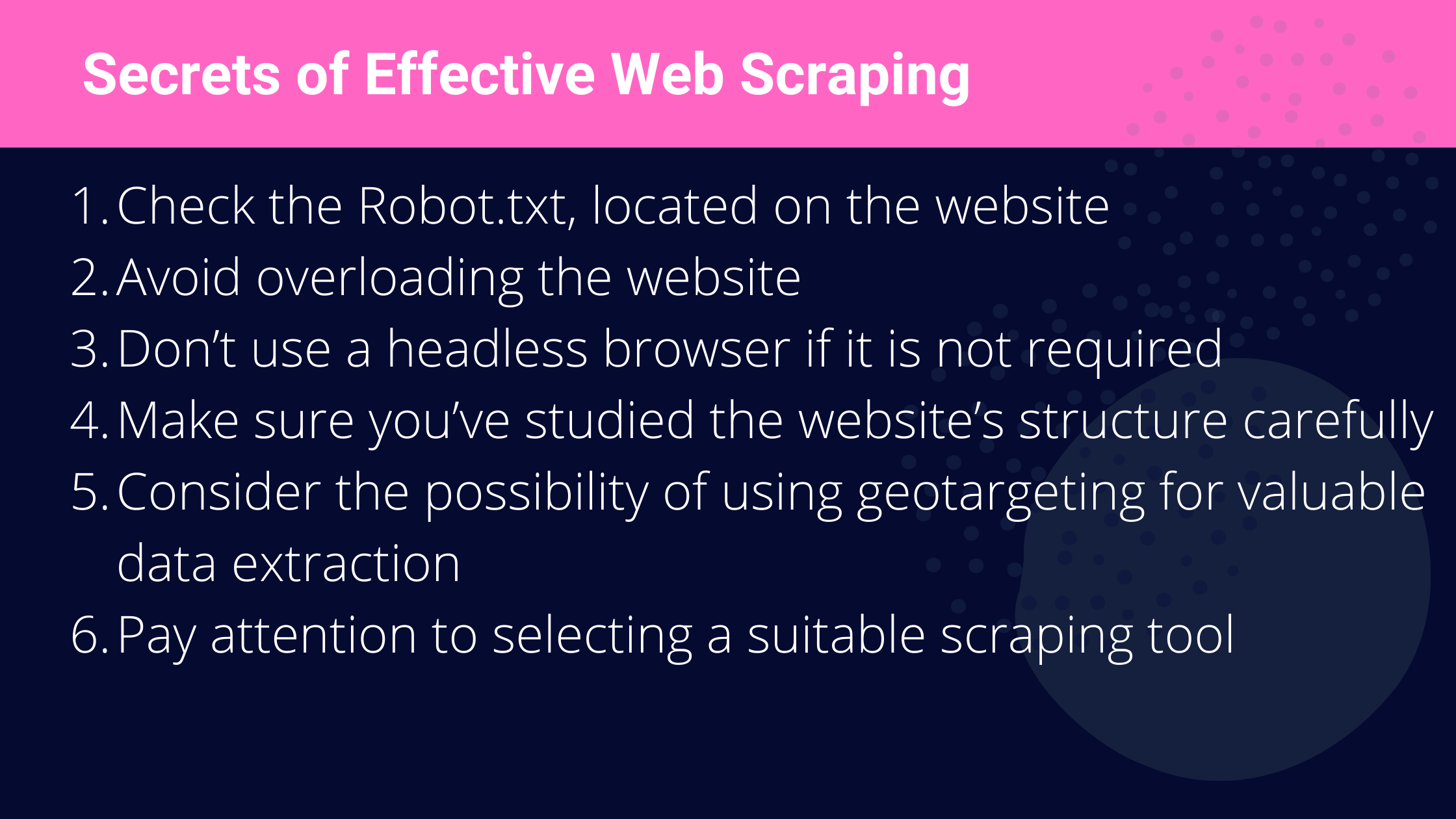

Secrets of Effective Web Scraping

Web scraping tools were initially designed to simplify and accelerate data collection, replacing manual intervention with automated tasks. However, your IP may get banned, which will seriously harm your project’s efficacy. Please, consider our main tips on how to avoid unwanted obstacles while working with web scrapers:

1. Check the Robot.txt, located on the website

Many websites have clear directives for scrapers, defined in a robot.txt file. On occasion, you will find that the website owners will specifically mention which web pages they want your scraper to access for a limited time or to avoid completely. It is advised to be respectful in regard to those guidelines and comply with them.

2. Avoid overloading the website

Don’t forget that during data extraction your scraping tool partially reserves traffic, intended for regular website users.

It might not impact your work, but if your scraper’s interaction with the website is too intensive, regular users might experience difficulties. In order to prevent that from happening most websites are programmed to detect the frequency of your requests and may blacklist your attempts for some time.

In case you encounter complications anyway, do not worry, RocketScrape has you covered. This tool uses a different proxy for each request, which will keep you protected against the website’s limitations.

Be advised that it remains within your power to deploy your scraping activity in phases, thus averting any potential harm you may cause to the website.

3. Don’t use a headless browser if it is not required

You should be aware that the more lines of code and extra features you exploit, the more valuable resources your web scraping tool will employ to execute JS scripts and condition the website to interact with it.

Unless there is a pressing need for website-scraper interaction, involvement of headless browsers will prove itself to be irrelevant.

Usually, all you need for a successful script is a good HTTP client and effective execution of parsing procedures.

Normally, one would use a headless browser to imitate human behavior, but it is not required at all if you rely on a good scraping tool.

4. Make sure you’ve studied the website’s structure carefully

Find time to examine closely all the elements of the website to determine the most efficient way to extract data.

As soon as you understand the key principles of its structure, you’ll have a clear vision on how a relevant web scraper should work.

In this sense, every website is unique and several attempts with different solutions might be necessary to achieve your goals, so tread lightly.

5. Consider the possibility of using geotargeting for valuable data extraction

Sometimes websites are showing different information, depending on the user’s geolocation. Thus, if your location parameter is not defined by a web scraping tool, the data you receive will be language- or location-specific. This means that you will have to condition how the website sees your requests for localized searches and that involves changing your IP to a foreign one (for instance, German IP for Germany-related listings).

Luckily, RocketScrape can help you with its “country_code” parameter.

Feel free to check out our API Documentation for more tips on RocketScrape capabilities.

6. Pay attention to selecting a suitable scraping tool

It is not recommended to try and grasp all the aspects of successful scraping at once. Focusing on your already existing skills and proficiencies makes more sense. Instead, try using one of the above-mentioned software that complements your knowledge of the programming language of your choice.

They certainly don't require any profound expertise. Moreover, these scraping tools are basically designed to be “low-code solutions”, allowing you to scrape any website of your choice with minimal knowledge of any language.

And for those who are new to this, the most seamless combination would probably be Scrapy, that will undertake the scraping logic, and RocketScrape to help you avoid getting blocked.

Users will likely face no obstacles while combining and executing these scraping solutions.

Types of Web Scraping Software: Automated or Code-based

There is no denying that the main controversy is whether using automated software is indeed preferable to code-based scraping tools. And yes, it might appear so in the beginning.

But in practice, it turns out that one universal solution that will satisfy all your scraping ambitions cannot exist. Meanwhile, that’s precisely what automated solutions are promising you.

The main issue here is that as soon as you begin scaling your project, you will likely be impeded in one of the following manners:

- Automated web scraping software does not scale alongside your project. In time, as your database expands and new information is added, the automated tool will be unable to adjust and sabotage the whole project.

- It might turn out to be not as flexible as you would expect. Specific goals will require specific measures and scripts, which is not covered by automatic solutions, since they are much less adjustable in the long run.

- Automated software is likely to deplete your budget. Automated solutions usually appear to be “affordable” at first, but will charge the user with more payments each time the amount of work is expanded. You will end up paying more expensive fees in comparison to code-based scraping tools like Scrapy or RocketScrape.

Certainly, automated software can be helpful while launching small projects, but such tools will become less and less efficient, should you continue evolving your project. If you’re seeking a solution that will truly meet your requirements and adjust to every website you’re interested in, code-based software is your best choice.

Besides, tested and reliable software, such as Scrapy, Cheerio or RocketScrape is undobtedly easy to master and will aid you with creating web scrapers that are perfect for you. What’s more, you can customize them to your liking and integrate with other libraries for the best results.

Feel free to reach out to us on support@rocketscrape.com in case you have any additional questions and need some guidance. You can always count on our support. Good luck!